Immersive audio in the metaverse

Why immersive audio matters in virtual worlds, and how to implement it.

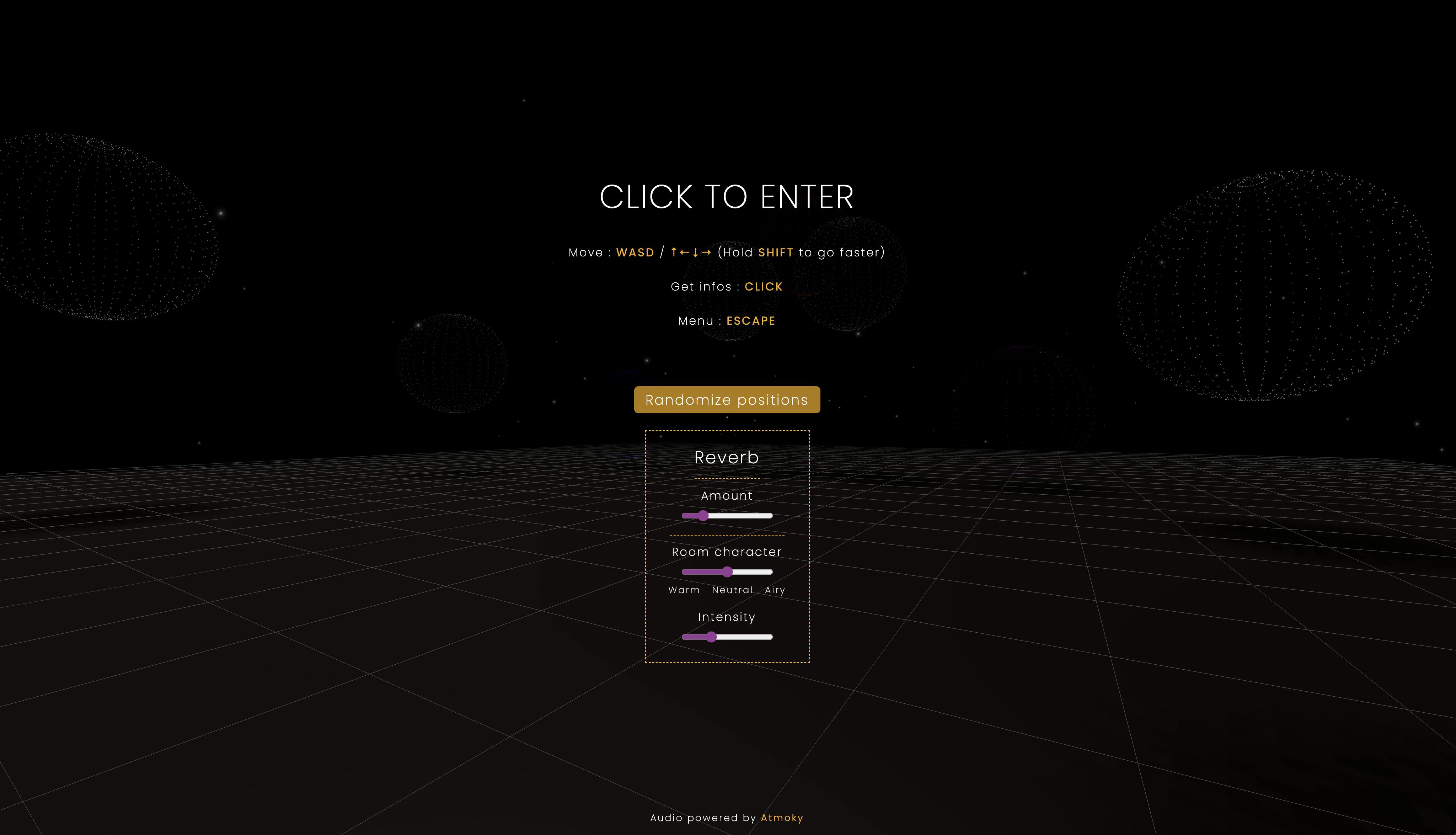

I was fortunate enough to be given access to the Atmoky Spatial Audio Web SDK demo, a solution for integrating immersive audio into Web-based 3D virtual environments. I thought this would be a good opportunity to share my thoughts on immersive audio in the metaverse, and a bit of my experience with this SDK. Let's first see why it matters so much (to me, and hopefully to you as well in a little while), then we can discuss the how.

Why is it so important?

First considerations

I'm passionate about virtual worlds (especially deeply immersive video games), web development and 3D audio. As you might have guessed, this tool sits precisely at the crossroads of these, and it addresses something that, I think, was missing there, especially given the rapid growth of metaverses and the increasing concern for accessibility.

Improbable has rightly shown it through the successful Otherside stress tests, powered by their M² technology: the next generation of virtual worlds - metaverse(s) or video games - is on the Web.

There are more and more tools to build 3D environments on the Web, including Three.js. Some environments are easy to load and discover, some require more extensive graphics resources. With the support of cloud gaming, these have become significantly more accessible.

Meanwhile, immersive audio continues to grow, but it is tough to tell the difference between real breakthroughs and marketing campaigns.

Immersive audio solutions for headphones (binaural) have been investigated for decades. The last few years have seen the arrival of 3D soundbars (with sound reflecting off the walls) and of dual-speaker systems that reproduce 3D sound in an impressive way, mostly based on crosstalk cancellation.

While these are becoming more and more accessible, headphone listening remains the most popular and the most affordable option.

So, what is the point of immersive audio?

It is colossal, though I can only summarize it here.

These concerns have not been neglected in video games. 3D sound can convey information (e.g. in Fortnite, where sound indicates the location of chests or enemies), set the atmosphere, and enhance the consistency and credibility of the space.

Consider this: a Zoom meeting is exhausting, and not particularly immersive. Now bring it into an expanded virtual space, 2D or 3D. The participants can move around, switch locations and atmospheres, and have different conversations with different people. Voices are spatialized, separated, and easier to understand. Plenty of regular people have Zoom/Google Meet calls, and are quite familiar with these concerns.

I believe the metaverse should be at the crossroads between a video game and a virtual meeting/call, and it's crucial that immersive audio be part of the development process for such spaces. This would enhance the envelopment of these environments, improve intelligibility in vocal exchanges, and extract informative value from sound spatialization. And a lot more.

This is precisely what I have been investigating for the last few years. If you are interested, and if you speak French, here is the link to my paper. It explores the applications of immersive audio in virtual worlds, especially in the metaverse. Sorry, it is not available in English yet. For the sake of this research, I built a basic 3D virtual space with Three.js, to integrate immersive audio. I'm still a novice developer, so the code might look messy or inaccurate at some points, but you can check it if you're interested.

Here is a link to the live demo. Please let me know what you think about it (optimization, features, sound spatialization...). And go wild! I've been working a lot on this project, and I'd be more than happy to get ANY kind of feedback. :)

I hope you are now more convinced of the value of immersive audio in the metaverse, or at least in virtual worlds. But what if you want to integrate immersive audio into a virtual environment?

How to integrate immersive audio on the Web?

If you're using a video game engine, such as Unreal Engine or Unity, go for it. There are some 3D sound APIs, such as Steam Audio, that can help you achieve more advanced immersive audio integration than the basic tools. Steam Audio also provides an API written in C.

If you'd rather use JavaScript - probably for the Web - follow me.

You've most likely already heard of Omnitone, or Resonance Audio. They are excellent libraries, worth a try. The latter can be integrated with video game engines, and its source code is in C++.

With these, you can definitely integrate 3D, or 360°, audio into a scene. But maybe you'll run into the same problem I had: sound crackling/stuttering when the camera is moving, because the positions of the sound sources struggle to update. The only library I haven't had this problem with is Atmoky. It is also the only one currently in active development. That's why I want to praise them, and vouch for its efficiency and ease of use.

First, let's try to be a little more specific.

What will you need to use?

By immersive audio, we mean 3D (or at least 360°) audio. It is not always mandatory, as you can have an immersive experience without 3D audio, but it is a key feature. And we want to go past the usual left/right spatialization.

Libraries such as Atmoky allow the use of binaural sound, which is a way for the user to experience a 3D sound sphere around them with only headphones. This lets you reduce the audio stream to only 2 channels, whereas 3D sound reproduction typically requires several channels/speakers.

This effect is mainly achieved through filters that reproduce the way sound objects are perceived, simulating the shape of the head, ears, hair... (e.g. a sound coming from behind will, roughly, have its high frequencies attenuated, because the skull and ears get in the way). But there is more!

This set of filters, called HRTFs (Head-Related Transfer Functions), can be customized to precisely match your head, ears, etc. This way, with the proper (possibly custom-made) HRTFs, you can accurately perceive sound in 3D with headphones.

With that covered, let's get back to the point.
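To hear what HRTF filtering does before reaching for an SDK, you can try the panner built into the browser's Web Audio API. This is only a minimal sketch and it is not Atmoky's API: `createHrtfPanner` is a hypothetical helper name, and the browser's panner uses a fixed, generic HRTF set that cannot be customized to your own head.

```javascript
// Hypothetical helper (not part of any SDK): route a source node through
// the Web Audio API's built-in HRTF panner.
const createHrtfPanner = (audioContext, sourceNode, { x = 0, y = 0, z = 0 } = {}) => {
  const panner = audioContext.createPanner();
  // The default panning model is 'equalpower' (plain left/right panning).
  panner.panningModel = 'HRTF';
  // By default the listener sits at the origin, facing -Z, with +Y up.
  panner.positionX.value = x;
  panner.positionY.value = y;
  panner.positionZ.value = z;
  sourceNode.connect(panner);
  panner.connect(audioContext.destination);
  return panner;
};
```

For instance, `createHrtfPanner(audioContext, someSourceNode, { x: 1, z: -1 })` would place the sound in front of the default listener and to its right.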

The Atmoky case

With such a library, this whole tedious process is handled for you, so you can place the sources and the listener on a 2D or 3D grid straight away. You should also be able to customize the HRTFs with your own parameters.

There is a demo provided by the company, which will be more explanatory than any words.

The integration process is pretty straightforward. A lot of the development process is abstracted, so you can focus on what matters. The documentation is clear and comprehensive, and you can quickly get started, as well as use the examples that are provided.

After trying out the demo, I provided some feedback to the team. Their response was swift, and the documentation was updated based on that feedback, which is quite nice to see.

It is very well suited to libraries like Three.js, or frameworks such as A-Frame. You just need to set the position and rotation of the listener to match the camera/character (see code sample).

```javascript
renderer.listener.setPosition(42, 10, 3.1415);
renderer.listener.setRotation(0.2, 0, 0);
```
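In a Three.js scene, these two calls would typically be made on every frame so the listener follows the camera. Here is a rough sketch of what that could look like. `syncListenerToCamera` is a hypothetical helper, and I'm assuming that `setRotation` accepts the camera's Euler angles in this order and unit, so check the SDK documentation before relying on it.

```javascript
// Hypothetical helper: copy a Three.js-style camera pose to the audio listener.
// `listener` is assumed to expose setPosition(x, y, z) and setRotation(a, b, c),
// as in the two calls above.
const syncListenerToCamera = (listener, camera) => {
  const { x, y, z } = camera.position;
  listener.setPosition(x, y, z);
  // Assumption: the SDK takes the same Euler angles (radians) and axis order
  // as camera.rotation. Verify this against the SDK documentation.
  listener.setRotation(camera.rotation.x, camera.rotation.y, camera.rotation.z);
};
```

You would then call `syncListenerToCamera(renderer.listener, camera)` once per frame, inside your render loop.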

If possible, I would recommend using streaming audio, rather than preloading (see code sample).

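```javascript
// Assumes `audioContext` (an AudioContext) and `renderer` (the SDK's audio
// renderer) were created earlier.
const createSource = (url, obj) => {
  // Stream through an <audio> element instead of preloading the whole file.
  const audioElem = document.createElement('audio');
  audioElem.src = url;
  audioElem.crossOrigin = 'anonymous';
  audioElem.preload = 'none';
  audioElem.load();
  audioElem.loop = true;

  // Feed the element into the Web Audio graph, then into a spatialized source.
  const audioElemSrc = audioContext.createMediaElementSource(audioElem);
  const source = renderer.createSource();
  source.setInput(audioElemSrc);
  source.setPosition(obj.position.x, obj.position.y, obj.position.z);

  // play() returns a Promise; browsers may reject it before any user gesture.
  audioElem.play().catch((err) => console.warn('Playback blocked:', err));

  return source;
};
```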
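One thing to keep in mind with the sample above: most browsers refuse to start audio until the user has interacted with the page, so calling `play()` right away may fail. A common workaround is to wait for the first click or tap. The sketch below is one possible way to do it, not something taken from the SDK. `startOnFirstGesture` is a hypothetical helper, and you would need to keep a reference to the audio element, which `createSource` does not currently return.

```javascript
// Hypothetical helper: start playback on the first user gesture.
// `target` is any EventTarget (e.g. document); `audioContext` is an
// AudioContext and `audioElem` an <audio> element.
const startOnFirstGesture = (target, audioContext, audioElem) => {
  const unlock = () => {
    // A context created before any interaction usually starts 'suspended'.
    if (audioContext.state === 'suspended') {
      audioContext.resume().catch((err) => console.warn('Resume failed:', err));
    }
    // play() returns a Promise that rejects if the browser still refuses.
    audioElem.play().catch((err) => console.warn('Playback failed:', err));
  };
  // { once: true } removes the handler after the first gesture.
  target.addEventListener('pointerdown', unlock, { once: true });
};
```

In a page, this could be wired up with `startOnFirstGesture(document, audioContext, audioElem)`.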

There is not much to add; the best would be to experiment and discover by yourself. You can request a free license, which is restricted to non-commercial and testing purposes.

The documentation is detailed enough, and the team caring enough, for you to get by!

Special thanks to Clemens, who presented the demo, provided access and corresponded with me afterwards.

Thank you for reading this far. Honestly, I really appreciate it.

Please, if you have any comments, advice or questions about this, or if you believe I made a mistake, feel free to contact me. If you want to discuss immersive audio, Web development, the metaverse... do the same! I'll be happy to chat. You can find me on Twitter (@0xpolarzero), or just email me if you prefer (0xpolarzero@gmail.com).

If you found this post instructive, feel free to share it! You can also find it as a Twitter thread. :)